- cross-posted to:

- pcgaming@lemmy.ca

If even half of Intel’s claims are true, this could be a big shake-up in the midrange market, which both Nvidia and AMD have entirely abandoned.

All these weird numeric names. I’m gonna build a GPU and name it Jonathan.

Pls no.

Just don’t name it Steve. You’re in for a world of trouble with GPU Steve.

Back to you, Steve.

The Arc cards actually have a really fun generational naming mechanic.

It’s RPG classes. First gen was Alchemist. Second (what the article is about) is Battlemage. I’m guessing we’re getting Cleric, Druid, etc.

It’s Celestial, so sayeth Steve

Sorry, Apple already took that one. Call it Jeff or something.

Jonathan, what are you doing?

Hello, my name is Roger!

Intel GPU claims are NEVER true.

Meh, I ended up with an A770 for a repurposed PC and it’s been pretty solid, especially for the discounted price I got. I get that there were some driver growing pains, but I’m not in a hurry to replace that thing, it was a solid gamble.

The A770 was definitely a “fine wine” card from the start. Its raw silicon specs were way stronger than the competition, it just needed to grow into it.

This one’s a bit smaller though…

Their promo benchmarks have it beating the A770, though, which is still a viable card at this price point. It’ll be interesting to see if that pans out in reviews with independent tests.

Not in the market for one of these, but very curious to see how the 780 fares later. Definitely good to have more midrange options.

The whole goal of Battlemage was to increase utilization and cut down on wasted silicon. The overall number of transistors is almost the same. If those transistors are utilized much more efficiently, then 25% should easily be doable with all of the other architectural improvements.

If there even is a 780. Rumor is it was canceled.

Yeah, I’m not sure where they are with that. Earlier leaks did have a couple of higher specs and the mid-size spec matches some of the 580 numbers. You’d also think they’d have called the 580 “780” for consistency if they weren’t doing any higher end parts. But then, it’s 2024 Intel, so whether they come later or don’t come at all is anybody’s guess.

I’ll say that it sure looks like there’s room for a bit more juice in the architecture, given the power draw and the specs. The 4070 is the sweet-spot midrange GPU, but it’s a bit too expensive, so I’d be happy to see more solid competition in that range, which is a bit harder than this 4060-ish space.

> …so I’d be happy to see more solid competition in that range, which is a bit harder than this 4060-ish space.

Don’t fall into the trap that every single Internet PC builder falls into.

Which is wishing for competition in the midrange… not to buy the competition, but just to drive Nvidia prices down so they don’t have to pay as much for their next Nvidia card. There’s only one way to break the monopoly, and that is to stop giving money to the monopoly.

Well, I already bought an Intel Arc card on purpose, unironically and not for review, so… your move, nerds.

It hasn’t been like that for a while now.

If I had a dime for every time I heard that exact line.

At a certain point it’s a fool me once, fool me twice, fool me fourteen times kinda thing.

Buy Nvidia forever, then.

Enjoy the prices!

Honestly, my other option is Apple silicon. I’m not a price sensitive buyer.

Hell yeah, 800 bucks for a basic-ass storage option.

🤷‍♀️

For 800 bucks you get nothing worth having there.

But the point is that so far, Intel has not produced anything worth having at any price. And for GPUs, their process node troubles will make it really hard for them to compete on price or on performance.

And that overlooks something Intel is usually super crappy at and NV nailed right out of the gate: developer support.

I was purely commenting on Apple silicon, and I should add that I own an M4 MBP. The best chip in the world can still suck as a product if it’s held hostage in this fashion: 800 bucks is what Apple charges for 1.5TB of added storage.

If they double up the VRAM with a 24GB card, this would be great for a “self hosted LLM” home server.

3060 and 3090 prices have been rising like crazy because Nvidia is VRAM-gouging and AMD inexplicably refuses to compete. Even ancient P40s (basically double-VRAM 1080 Tis with no display outputs) are getting expensive. 16GB on the A770 is kinda meager, but 24GB is the point where you can fit the Qwen 2.5 32B models that are starting to perform like the big corporate API ones.

And if they could fit 48GB with new ICs… Well, it would sell like mad.
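A rough back-of-the-envelope sketch of why 24GB is the threshold for a 32B model: the weights alone take about parameters × bits-per-weight ÷ 8 bytes. The ~4.5 bits per weight (roughly a 4-bit quantization plus scale overhead) and the flat 2 GB allowance for KV cache and runtime buffers are assumptions, not measured figures; real usage depends on the quantization format, context length, and runtime.

```python
def weight_vram_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate GB (1 GB = 1e9 bytes) needed just to hold the model weights."""
    # params_billion * 1e9 weights * (bits / 8) bytes each, divided by 1e9 bytes per GB
    return params_billion * bits_per_weight / 8


def fits_in_vram(params_billion: float, bits_per_weight: float,
                 vram_gb: float, overhead_gb: float = 2.0) -> bool:
    """True if the weights plus an assumed flat overhead fit in the card's VRAM.

    overhead_gb is a hypothetical allowance for KV cache and runtime buffers.
    """
    return weight_vram_gb(params_billion, bits_per_weight) + overhead_gb <= vram_gb


if __name__ == "__main__":
    # A 32B model at an assumed ~4.5 bits/weight needs ~18 GB for weights alone.
    for vram in (12, 16, 24):
        print(f"{vram} GB card fits 32B @ 4.5 bpw: {fits_in_vram(32, 4.5, vram)}")
```

Under these assumptions, 12GB and 16GB cards fall short while 24GB fits; at 8 bits per weight the same model needs about 32 GB for weights alone, which is where a 48GB card would come in.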

I always wondered who they were making those mid- and low-end cards with a ridiculous amount of VRAM for… It was you.

All this time I thought they were scam cards to fool people who believe that bigger number always = better.

Also “ridiculously” is relative lol.

The LLM/workstation crowd would buy a 48GB 4060 without even blinking, if that were possible. These workloads are basically completely VRAM-constrained.

Yeah, AMD and Intel should be running high VRAM SKUs for hobbyists. I doubt it’ll cost them that much to double the RAM, and they could mark them up a bit.

I’d buy the B580 if it had 24GB of RAM. At 12GB, I’ll probably give it a pass because my 6650 XT is still fine.

Don’t you need Nvidia cards to run AI stuff?

Nah, Ollama works w/ AMD just fine, you just need a card w/ enough VRAM for the model.

I’m guessing someone would get Intel to work as well if they had enough VRAM.

Not at all

Like the 3060? And 4060 Ti?

It’s ostensibly because they’re “too powerful” to have their VRAM cut in half (so 6GB on the 3060 and 8GB on the 4060 Ti), but yes, more generally speaking, these are the sweet spot for VRAM-heavy workstation/compute workloads. Local LLMs are just the most recent one.

Nvidia cuts VRAM at the high end to protect their server/workstation cards, AMD does it… Just because?

More like back in the day when you would see vendors slapping 1GB on a card like the Radeon 9500, when the 9800 came with 128MB.

Ah yeah those were the good old days when vendors were free to do that, before AMD/Nvidia restricted them. It wasn’t even that long ago, I remember some AMD 7970s being double VRAM.

And, again, I’d like to point out how insane this restriction is for AMD given their market struggles…

An LLM card with Quick Sync would be the kick I need to turn my N100 mini into a router. Right now, my only reason to move is that my storage is connected via USB, and SATA alone is not enough value for a whole new box. £300 for Ollama, much faster ML in Immich, etc., and all the transcodes I could want would be a “buy now, figure the rest out later” moment.

Oh, also, you might look at AMD’s Strix Halo in 2025?

Its IGP is beefy enough for LLMs, and it will be WAY lower power than any dGPU setup, with enough VRAM to be “sloppy” and run stuff in parallel with a good LLM.

*adds to wishlist*

You could get that with 2x B580s in a single server, I guess, though you could have already done that with the A770s.

… That’s nuts. I only just graduated to a mini from a Pi; I didn’t consider a dual-GPU setup. Arbitrary budget aside, I should have added an “idle power” constraint too. It’s reasonable to assume that as soon as LLMs get involved, all concept of “power efficient” goes out the window. Don’t mind me, just wishing for a unicorn.

Strix Halo is your unicorn; idle power should be very low (assuming AMD’s VCN encoder is OK in place of Quick Sync).

I’m reserving judgement, of course, to see how things actually play out, but I do want to throw a cheap PC together for my nephew, and that card would make a fine centerpiece.

Don’t forget that mini PCs, like a Beelink with an 8745HS, can be pretty great for games.

I have an AMD-based Beelink, and I’m shocked at what it can run.

Same. I’m not a gamer but a developer, and I replaced a bulky full-ATX tower (Core i7) with a Beelink SER5 mini PC (a 5600H, bought before the 5800H version was out, so I got a good price).

Yup. My kids pretty much only play Minecraft and Lego games, and those work fine on my 3500U. The 8745HS would probably be way better.

Why not go for something like a Steam Deck or Ayaneo as the main computer? I’m just wondering if that would be a good alternative for someone younger in terms of price and performance.

They might be wanting to build a proper desktop with RGB and all the jazz. While the Steam Deck does kick ass when plugged into a keyboard, mouse, and monitor, it’s not quite as impactful as a tower in terms of presence.

I use my Deck with the official dock all the time so I don’t disagree with Dindonmasker’s point that the value of a Deck is tremendous and a great alternative to building a tower.

Once you have a tower, you can start to upgrade it too. Consoles are all or nothing replacements.

You’re gonna get some framerate drops for sure when it’s driving a bigger monitor. The Steam Deck’s internal screen is only 1280 x 800, which is why games run so well on its mobile hardware. That’s about the resolution of a monitor from the late ’90s.
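The resolution point is easy to put in numbers: GPU rendering cost scales roughly with the number of pixels per frame (a simplification that ignores CPU limits and fixed per-frame costs). A quick sketch comparing the Deck's 1280 x 800 panel with two common desktop resolutions:

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels rendered per frame at a given resolution."""
    return width * height


DECK = pixel_count(1280, 800)  # Steam Deck internal screen

MONITORS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
}

if __name__ == "__main__":
    print(f"Steam Deck: {DECK:,} pixels")
    for name, (w, h) in MONITORS.items():
        ratio = pixel_count(w, h) / DECK
        print(f"{name}: {pixel_count(w, h):,} pixels, {ratio:.2f}x the Deck")
```

A 1080p monitor means roughly twice the pixels per frame of the internal screen, and 1440p about 3.6 times, which is where the framerate drops come from.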

Funny the Radeon RX 480 came out in 2016 at a similar price. Is that a coincidence?

Incidentally, the last great generation to offer a midrange GPU at a midrange price. The Nvidia 1060 was great too, and the 1080 is sometimes called one of the best deals of all time. Since then, everything has been overpriced. The RX 480 was later replaced by the 580, which was a slight upgrade at great value too. But then the crypto wave hit, and soon a measly 580 cost nearly $1000!!! Things have never quite returned to normal since. Too little competition with only Nvidia and AMD.

The 30 series started to look like a return to well-priced cards. Then crypto hit and ruined that. Now we have AI to keep that gravy train going. Once the AI hype dies down, maybe we’ll see cards return to sane pricing.

I’m still rocking my RX 480 and it’s going strong. It’s starting to show its age, and I’m not sure what I’ll upgrade to next, but for now it works nicely.

It’s a pretty decent value when stacked up against RTX 4000 and RX 7000 GPUs.

But we’re only a month or two from the next generation of Nvidia & AMD cards.

Those companies could even shit the bed for a second generation in a row on price-to-performance improvements, and the B580 will probably just end up being in-line with those offerings.

Yeah, but by the time the 5060 is available, the tariffs will have it at $450+.

Yeah, I’ll be curious to see how that all plays out.

Current GPU pricing still seems to have the 2019-2020 25% GPU tariff baked in. Note how prices didn’t drop 25% when those tariffs were rescinded.

Do Nvidia & AMD factor those in their pricing and give consumer a break? Or do they just jack up prices again and aim for mega-profits?

Hell, will the tariffs even happen? At one point, those tariffs were supposedly contingent on U.S. Federal income taxes being abolished, and being used to replace that government tax income. The income tax part seems to have been dropped from the narrative ever since the election.

Current GPU prices have consumer spending habits in mind. If people are willing to pay at a certain price, they will sell at that price

I literally just FOMO’d myself into a 7900 XTX today because of the possibility of price hikes.

As someone with a 6650 XT, which is a little slower than the 6700 or 4060, I doubt the increased VRAM, while of course still nice, is enough to push this card to 1440p. I struggle even at 1080p in some games, but I guess if you’re okay with ~40 FPS, then you could go that high.

Unfortunately, if the 4060 is roughly the target here, that’s still far below what I’m interested in, which is more the upper midrange stuff (and I’d love one with at least 16 GB of VRAM).

At least the price is much more attractive now.

Yeah, I’ve got a 6650 XT as well, and it’s been great for what I want it to be. I play in 1440p, mostly older games and indie games, and even w/ newer games it gets an acceptable framerate (and yeah, 40 is acceptable).

That said, I’m interested in playing w/ VR and LLMs, and 12 GB VRAM just ain’t it; I’d much rather get 16GB or more. However, I don’t need top-tier performance, so something like a 15-20% uplift may be enough. I play exclusively on Linux, so good Linux support is really important (and this new card seems to hit that w/ FOSS drivers), but AMD provides better cards w/ more VRAM for not that much more money (you can get a 6800 XT for $100 or so more). That would last at least a couple of years longer than the B580.

But if I decide to build a desktop for my kids, maybe I’ll try it out. $250 isn’t a bad price, it’s just not a very big uplift from what I’ve got. If they could add another 4GB VRAM and keep the price under $300, I’d be a bit more interested since that opens up entry-level LLMs.

Yeah, 40 is just not for me. I’d rather go 1080p and hopefully get 75+ FPS. It’s really hard to go back from that to something as choppy as 40; even 60 feels kinda bad now.

And yes, I use local LLMs too, and 8 GB of VRAM is kinda painful and limiting, though the biggest hurdle is still ROCm & Python, which are an absolute mess. I’d love to get even more than 16 GB, but that’s usually for the high-end segments and gets real pricey real quick.

Linux and the fact that I play a lot of indie titles are also why I’d still avoid Intel, even if they had something in the upper midrange. But I still would’ve loved to see some competition in that area, because then AMD would also have to deliver on price, and that’d be good for me.

Yeah, 40 isn’t great, but I play a lot of Switch games, and 40 is generally a good framerate for those. But I definitely notice it when switching between new AAA and indie/older games.

Intel could’ve earned my business by making up for mediocre performance with a ton of VRAM so I could tinker w/ LLMs between games. But no, I guess I’ll stick w/ my current card until I can’t even get 40 FPS reliably.

Dunno, realistically speaking it is a slightly cheaper 7600, hardly a market shake-up.

And wasn’t the 7600 awful value?

Depends… it’s a very capable 1080p card with extremely good power efficiency.

There is the overclocked “XT” version that is generally considered bad value, as it loses the power efficiency aspect and costs significantly more for only a little extra performance.

I feel like I would reach for the used market over a 7600 based on what I read. Even if there were power constraints, one could power cap a 6000 series card.

…Hence I got a 3090 when the 4000/7000 series was long out.

You are overlooking idle power consumption. A 7600 basically idles at 5W or so (and turns off all fans). Now think about how many hours you spend surfing the web etc. on your PC vs. actual gaming, and you can see that this makes a big difference.

Seems like a decent card, but here are my issues:

- 12 GB RAM - not quite enough to play w/ LLMs

- a little better than my 6650 XT, but not amazingly so

- $250 - a little better than RX 7600 and RTX 4060 I guess? Probably?

If it offered more RAM (16GB or ideally 24GB) and stayed under $300, I’d be very interested because it opens up LLMs for me. Or if it had a bit better performance than my current GPU, and again stayed under $300 (any meaningful step-up is $350+ from AMD or Nvidia).

But this is just another low- to mid-range card, so I guess it would be interesting for new PC builds, but not really an interesting upgrade option. So, pretty big meh to me. I guess I’ll check out independent benchmarks in case there’s something there. I am considering building a PC for my kids using old parts, so I might get this instead of reusing my old GTX 960. The board I’d use only has PCIe 3.0, though, so I worry performance would suffer and the GTX 960 may be a better stop-gap.

> 12 GB RAM - not quite enough to play w/ LLMs

Good. Not every card has to be about AI, there’s enough of those already; we need gaming cards.

Sure, I’m just saying what I would need for this card to be interesting. It’s not much better than what I have, and the extra VRAM isn’t enough to significantly change what I can do with it.

So it either needs to have better performance or more VRAM for me to be interested.

It’s a decent choice for new builds, but I don’t really think it makes sense as an upgrade for pretty much any card that exists today.

If their claims are true, I’d say this is a decent upgrade from my RX 6600 XT and I’m very likely buying one.

Sounds like a ~10% upgrade, but I’d definitely wait for independent reviews because that could be optimistic. It’s certainly priced about even with the 6600 XT.

But honestly, if you can afford an extra $100 or so, you’d be better off getting a 6800 XT. It has more VRAM and quite a bit better performance, so it should last you a bit longer.

There are some smaller Llama 3.2 models on Ollama that would fit in 12GB. I’ve run some of the smaller Llama 3.1 models in under 10GB on NVIDIA GPUs.

It’s weird that Intel/AMD seem so uninterested in the LLM self-hosting market. I get that it’s not massive, but it seems way big enough for niche SKUs like the ones they were making for blockchain, and they are otherwise tripping over themselves to brand everything with AI.

Exactly. Nvidia’s thing is RTX, and Intel/AMD don’t seem interested in chasing that. So their thing could be high mem for LLMs and whatnot. It wouldn’t cost them that much (certainly not as much as chasing RTX), and it could grow their market share. Maybe make an extra high mem SKU with the same exact chip and increase the price a bit.

Well AMD won’t do it ostensibly because they have a high mem workstation card market to protect, but the running joke is they only sell like a dozen of those a month, lol.

Intel literally had nothing to lose though… I don’t get it. And yes, this would be a very cheap thing for them to try, just a new PCB (and firmware?) which they can absolutely afford.

They might not even need a new PCB, they might be able to just double the capacity of their mem chips. So yeah, I don’t understand why they don’t do it, it sounds like a really easy win. It probably wouldn’t add up to a ton of revenue, but it makes for a good publicity stunt, which could help a bit down the road.

AMD got a bunch of publicity w/ their 3D Cache chips, and that cost a lot more than adding a bit more memory to a GPU.

Are the double-capacity GDDR6X ICs being sold yet? I thought they weren’t, and “double” cards like the 4060 Ti were using 2 chips per channel.

I’m really not sure, and I don’t think we have a teardown yet either, so we don’t know what capacities they’re running.

Regardless, whether it’s a new PCB or just swapping chips shouldn’t matter too much for overall costs.

Using ML upscaling does not qualify it as a 1440p card… what a poor take.

How is compatibility with older games now?

Because I’m not buying a GPU unless it works with everything.

We’ll see come launch, but even the original Arc cards work totally fine with basically all DX9 games now. Arc fell victim to half baked drivers because Intel frankly didn’t know what they were doing. That’s a few years behind them now.

Intel designed its uarch around DX11/12 and Vulkan, without hardware-level support for DX9 and older draw calls. That’s a reasonable choice for a ground-up implementation, but it also means older graphics APIs only run through a translation/emulation layer that turns DX9 into DX12. And driver emulation is always an imperfect science.

A lot of it will have been because half the game optimisation code was often inside the drivers.

So Intel devs may not know what they were doing, but game devs are often worse.

🤞🏼

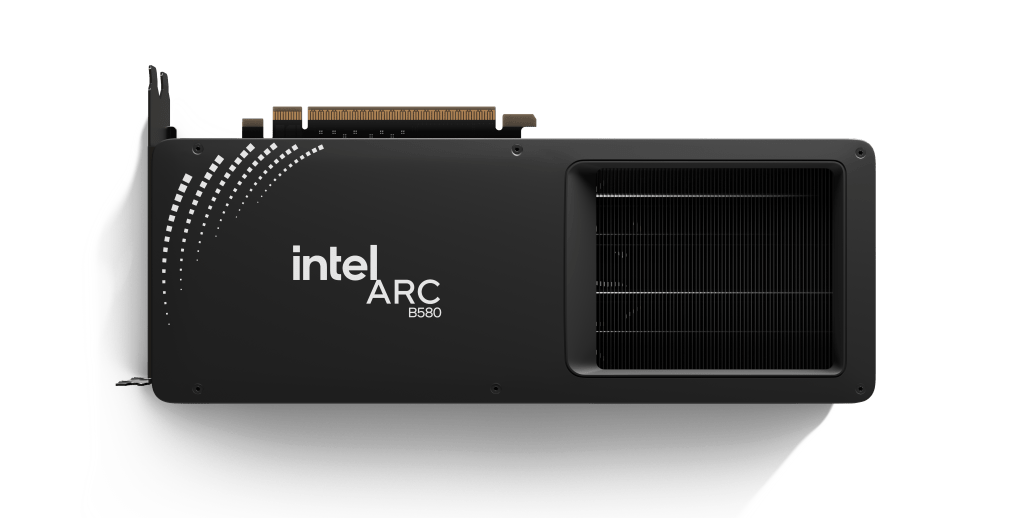

Although still behind AMD and NVIDIA on the performance front, from a design standpoint Intel is bringing their A game. The black limited edition card looks so slick.

What’s the chance of there being a B780? Rumors suggested it’s canceled… And they appear to be true?

How would this compare to an AMD RX 580? I can’t find it in the usual charts.

I compared my Vega 56 with the RX 7900 GRE, which would be a 2.5x to 3x performance upgrade. I’d imagine the RX 580 to B580 swap would be in the same ballpark.

Looking at Vega’s release reviews though, it was 40% faster than the RX 580. I assume your gains would be higher than 200%.
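Relative speedups multiply rather than add. Taking the two comments' figures at face value (Vega 56 about 1.4x an RX 580; RX 7900 GRE 2.5x to 3x a Vega 56), here is a minimal sketch of the chained estimate for RX 580 to RX 7900 GRE. These are the commenters' ballpark numbers, not benchmark results, and the B580 itself is not part of this chain.

```python
from math import prod


def chained_speedup(*factors: float) -> float:
    """Overall speedup from a chain of relative speedups (A->B, B->C, ...)."""
    return prod(factors)


def percent_gain(speedup: float) -> float:
    """Convert a speedup multiplier (e.g. 3.5x) into a percentage gain (250%)."""
    return (speedup - 1.0) * 100.0


if __name__ == "__main__":
    vega_over_rx580 = 1.4  # "40% faster than the RX 580"
    for gre_over_vega in (2.5, 3.0):  # "2.5x to 3x performance upgrade"
        total = chained_speedup(vega_over_rx580, gre_over_vega)
        print(f"RX 580 -> 7900 GRE: {total:.1f}x ({percent_gain(total):.0f}% gain)")
```

That chain works out to roughly 3.5x to 4.2x, i.e. about a 250% to 320% gain, which is consistent with the "higher than 200%" guess for the GRE.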