I made this and thought you all might enjoy it, happy hacking!

I tried running this with some output from a Wizard-Vicuna-7B-Uncensored model and it returned

('Human', 0.06035491254523517)So I don’t think that this hits the mark, to be fair, I got it to generate something really dumb but a perfect LLM detection tool will likely never exist.

Good thing is that it didn’t false positive my own words.

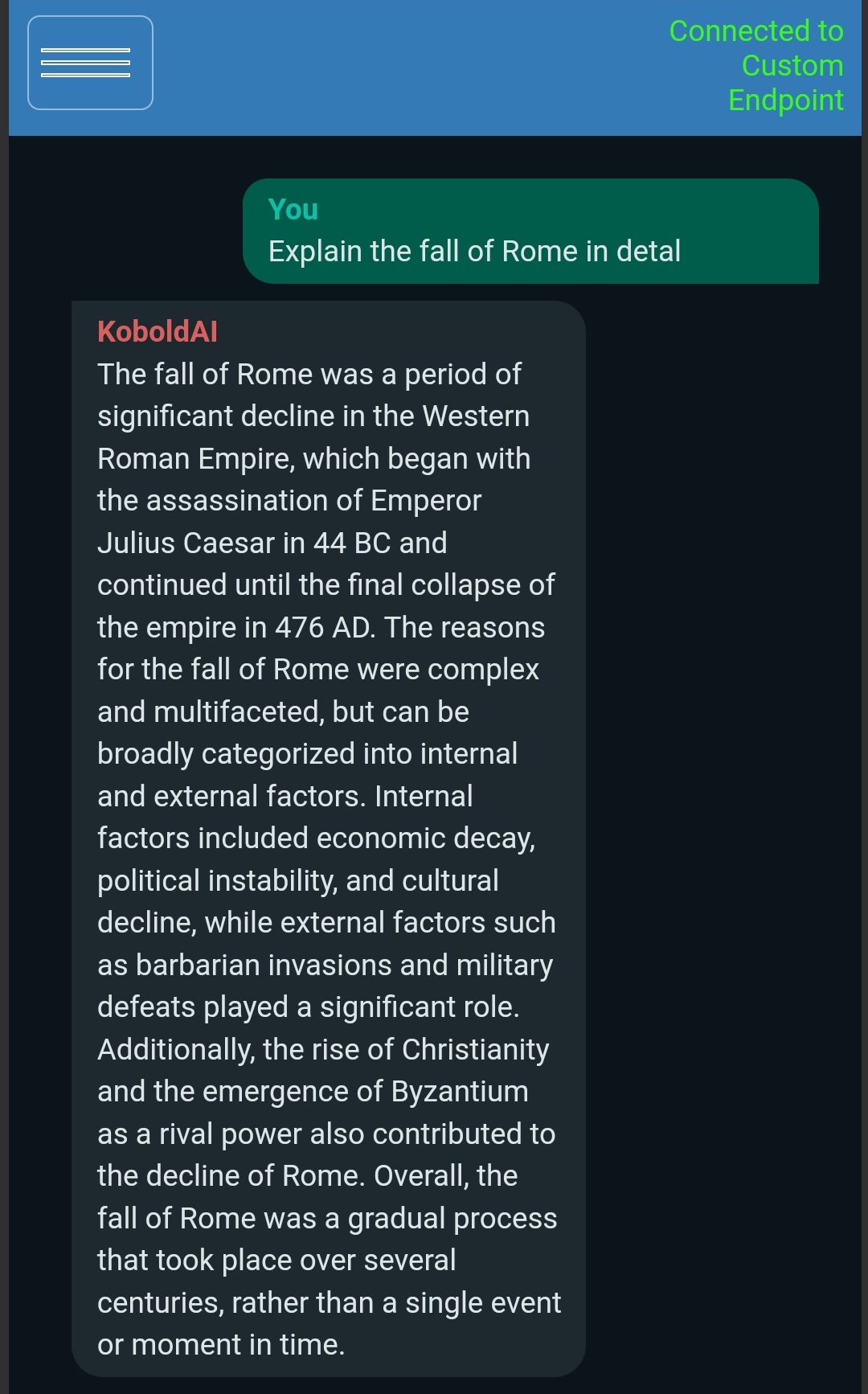

Below is the output of my LLM, there’s a decent amount of swearing so heads up

Edit:

Tried with a more sensible question and still got a false negative

('Human', 0.03917657845587952)

Lmao what the hell. That was hilarious.

That is a genius approach, though a big challenge is probably going to be to select the correct corpus

It’s not if you’re aware of Generative Adversarial Network, it’s a losing game for the detector, because anything you use to try and detect generated content would only strengthen the neural net model itself, so by making a detector, you’re making it better at evading detection. At least in language model, they are looking at how GAN can be applied in language model.