Hey all,

In the market for a GPU, would like to use Bazzite, mostly a Steam user with some SteamVR (rare), and have run into nvidia issues with Bazzite and a 3070 previously.

With the recent news on nVidia’s beta drivers and Plasma’s sync support in beta, I’m newly on the fence about switching to AMD given nvidia having a better performance to cost ratio, the power usage (big one for a compact living room system), and the fact that they have the potential for HDMI2.1 support which AMD doesn’t have a solution to yet.

What are community thoughts? I’ll probably hold out for some reports on the new drivers regardless, but wanted to check in with the hive mind.

Thanks!

Just wait and see how good those drivers are first.

AMD given nvidia having a better performance to cost ratio

When the fuck?

and the fact that they have the potential for HDMI2.1 support which AMD doesn’t have a solution to yet.

An open source solution exists for Intel, the way it works is just by a translation layer between HDMI & DisplayPort. I imagine AMD will do the same thing.

Why even use HDMI when AMD does DisplayPort 2.0 where nvidia only does 1.4?

I’d say nvidia would work fine but you need to take into account that the drivers can be a bit flakey.

A lot of displays don’t support DP unfortunately. I have an LG C2 which is perfect for desktop use and one of the more affordable OLED screens out there, and it does not support DP. The PC monitor equivalent that uses the same panel is made by Asus, but that one has a $600 markup.

There are converters these days, albeit with some minor quirks. And they typically only use DP 1.4 anyway, although that’s enough for perceptually lossless 4k@120hz + HDR.

Do they support VRR though? Last I heard that was still an issue with these converters.

Do they support VRR though? Last I heard that was still an issue with these converters.

Yes, some of them do, but it can depend on the firmware (some of them are flashable). I will add that my display supports both FreeSync over HDMI and VRR, and it’s not always clear which it is actually using under the hood, so be aware of that. You’ll need a pretty recent kernel: definitely at least 6.3, but preferably 6.6 or later.

My experience is the adapters have much better support under Linux as well, with them being almost useless on Windows (no HDR, no VRR, and potentially no 120Hz). But it’s been a while since I’ve tested.

Here’s the adapter I use: https://www.amazon.com/Cable-Matters-102101-BLK-Computer-Adapter/dp/B08XFSLWQF/

And a long discussion thread about HDMI 2.1 and adapters used to work around the issue: https://gitlab.freedesktop.org/drm/amd/-/issues/1417 which may be helpful if you run into issues.

EDIT: Fixed the link

Here’s the adapter I use: https://www.youtube.com/watch?v=-b6w814wXvc

Did you copy the wrong link? This was a random youtube video.

Good to hear that some adapters do work though. The lack of HDMI 2.1 basically prevented me from ever considering AMD, but if there are converters that work that certainly opens up my options.

My bad, the two clipboards on Linux still trip me up after all of these years. Anyway, I’ve added a link to the above.

Thanks! So does VRR work out of the box for you, or did you have to do tweaks to get it to work? The answers on the Amazon page are conflicting, with the manufacturer saying VRR is not supported but some users saying it is. Don’t know who to believe.

given nvidia having a better performance to cost ratio

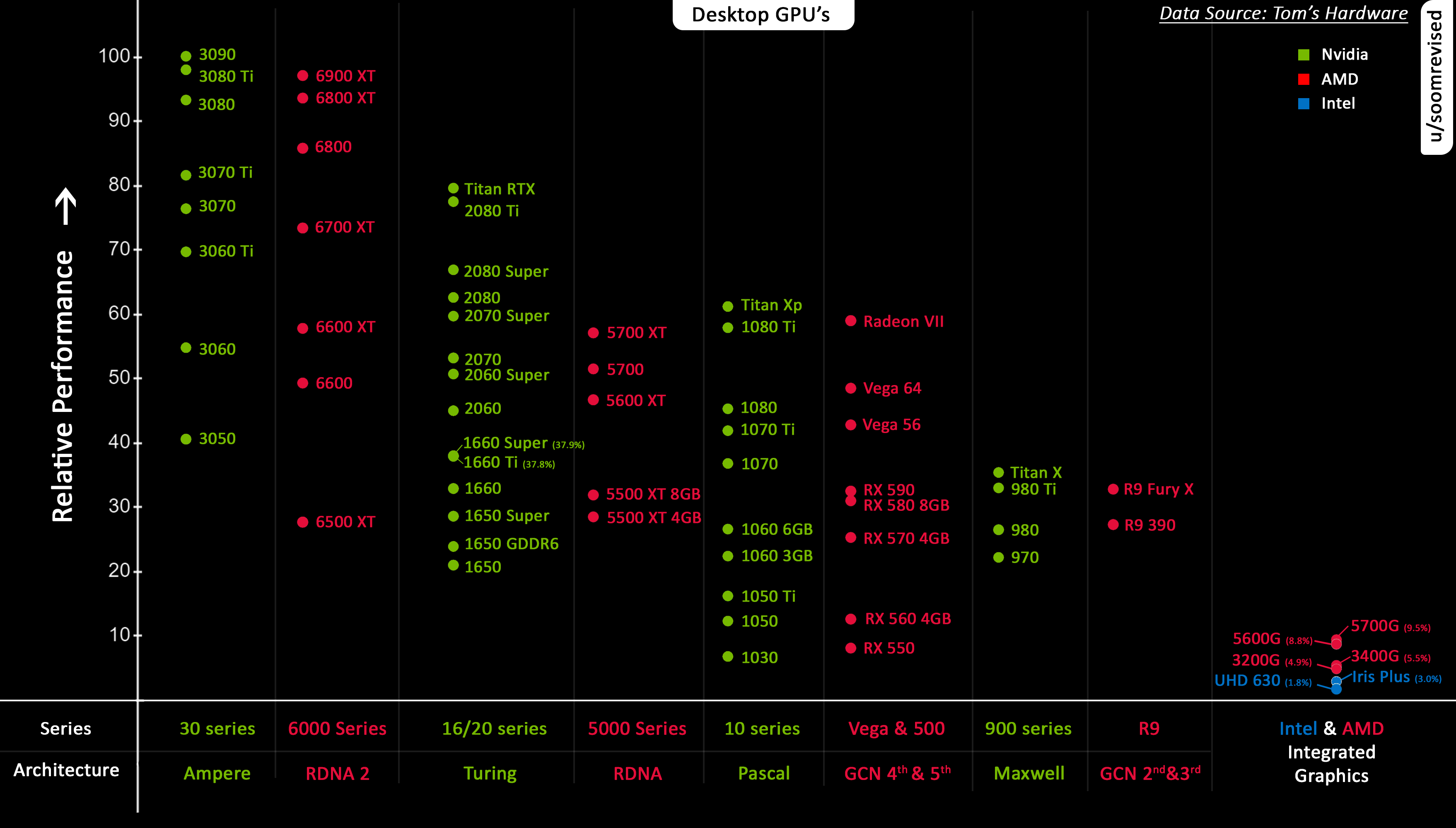

I actually agree. The 6700 XT, for example, was supposed to compete with the 3070, but instead, it barely surpassed the 3060ti in real world tests.

But I agree with your main point, and I’d trade that slight drop in performance per dollar for a better experience on Linux. I’m planning my exit strategy from Windows, and I’m still working on accepting that my Nvidia card just won’t feel as nice (until NVK is more mature).

An Nvidia 3070 costs $420 and benchmarks at 22,403 (53.34 benchmark points per dollar). An AMD 6800 costs $360 and benchmarks at 22,309 (61.97 benchmark points per dollar).

So you get a 0.4% drop in performance for a 14.3% drop in price. That is significantly more performance per dollar.

Or if you go with a 3070 Ti ($500, 23,661 -> 47.32) vs a 7800 XT ($500, 23,998 -> 48.00) you get a 1.4% performance increase for free (not really that significant I know, but still, it’s free performance).

All of the numbers were taken from https://www.videocardbenchmark.net/high_end_gpus.html
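If anyone wants to redo this math with their own local prices, here is a rough Python sketch. The prices and scores are just the ones quoted above; the function names are mine, and the dollar amounts are whatever I happened to find, so treat them as placeholders.

```python
# Performance-per-dollar comparison using the prices and benchmark
# scores quoted in this comment. Swap in your own local prices.

def points_per_dollar(score: float, price: float) -> float:
    """Benchmark points you get for each dollar spent."""
    return score / price

def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent (negative means a drop)."""
    return (new - old) / old * 100

# name -> (price in USD, benchmark score); values as quoted above
cards = {
    "RTX 3070": (420, 22403),
    "RX 6800": (360, 22309),
    "RTX 3070 Ti": (500, 23661),
    "RX 7800 XT": (500, 23998),
}

for name, (price, score) in cards.items():
    print(f"{name}: {points_per_dollar(score, price):.2f} points per dollar")

# 3070 -> 6800: how much performance and price change
perf_delta = percent_change(cards["RTX 3070"][1], cards["RX 6800"][1])
price_delta = percent_change(cards["RTX 3070"][0], cards["RX 6800"][0])
print(f"3070 -> 6800: {perf_delta:.1f}% performance, {price_delta:.1f}% price")
```

Synthetic scores are only one way to rank cards, so the same two functions work just as well with average FPS from whatever games you care about.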

My numbers were taken from a comparison of real world performance via gameplay (FPS comparisons), not artificial benchmark scores, but those prices are still really good.

The 6800 is better than a 3070 in both artificial benchmarks and real-world ones (it lands somewhere between a 3070 Ti and a 3080), and the fact that it’s cheaper means it’s certainly the better option for performance per dollar.

Here’s an old graph I still have saved:

Nvidia sucks on Linux because they don’t care.

They really should care, because that market is growing, and the Steam Deck uses Linux. Not sure which GPU Decks use but… It would be cool if they cared just a little bit.

Deck uses AMD

Right. So if Nvidia wants to even have a chance at being dominant in that market now that handhelds are starting to use Linux, they’d better start caring.

It’s a relatively small niche. NVIDIA won’t beat AMD in the embedded space because they just don’t have a CPU, so they can’t make something like the Deck has.

If we do see NVIDIA in the handheld space, it’ll be either a full SoC that only does GeForce Now, or they’ll team up with Intel for a prosumer device, which will probably run Windows.

The Linux market is growing, but it’s also quite small. NVIDIA mostly cares about AI these days, so they’re far more interested in the data center and probably won’t dedicate many resources to anything else, other than Windows gaming, which is a big one.

It would be nice, but Linux gamers still have options. AMD is tried and true, and Intel has a good track record for Linux support, so either of those would be good options. Unfortunately, this means you’re better off in the bottom to middle of the market, as the top of the market is still dominated by NVIDIA.

Plus Nvidia already makes the SoC for the Nintendo Switch, which is significantly more successful than the Steam Deck.

Sure, but that hardware is incapable of running PC games because it runs ARM, not x86. So if it’s going into a handheld, it will either be a locked down console like Nintendo, or run something with a compat layer like Windows (unlikely because it’ll leave a lot of performance on the table until games port).

So the Switch existing has no impact on Nvidia courting Linux users.

I don’t know what you base everything you said on but it was a lot of text so it’s probably right. I feel like I’m out of my depth here. 😌👍

I’m just saying there aren’t that many Linux users and Linux handhelds (e.g. Steam Deck) use AMD APUs, so NVIDIA probably doesn’t care all that much. That’s really it.

If we want NVIDIA to care more, Linux needs more people using it. A lot more. And they need to be using it on desktop or laptop hardware.

If you want a more open platform, that works better on Linux and has better value, go AMD

given nvidia having a better performance to cost ratio,

In what part of the world? I haven’t found that to be true.

the power usage (big one for a compact living room system),

You might want to do some more homework in this area. I recall AMD having better performance/watt in the tests I read before buying, but it’s hard to declare a clear-cut winner, because it depends on the workloads you use and the specific cards you compare. AMD and Nvidia don’t have exactly equivalent models, so there’s going to be some mismatch in any comparison. In stock configurations, I think both brands were roughly in the same ballpark.

Departing from stock, some AMD users have been undervolting their cards, yielding significant power savings in exchange for slight performance loss. Since you’re planning a compact living room system, you might want to consider this. (I don’t know if Nvidia cards can do this at all, or whether their drivers allow it.)

Regardless of brand, you can also limit your frame rate to reduce power draw. I have saved 30-90 watts by doing this in various games. Not all of them benefit much from letting the GPU run as fast as it can.

and the fact that they have the potential for HDMI2.1 support which AMD doesn’t have a solution to yet.

AMD cards do support HDMI 2.1. Did you mean Fixed Rate Link features, like variable refresh rate, or uncompressed 4K@120Hz? You’re not going to get that natively with any open-source GPU driver, because the HDMI Forum refuses to allow it. Most people with VRR computer displays use DisplayPort, which doesn’t have that problem (and is better than HDMI in nearly every other way as well). If you really need those FRL features on a TV, I have read that a good DisplayPort-to-HDMI adapter will deliver them.
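To put rough numbers on why 4K@120Hz needs the FRL side of HDMI 2.1, here is a back-of-the-envelope Python sketch. It only counts active pixels (no blanking intervals, no DSC compression), so the real requirement is somewhat higher. The link payload figures are commonly quoted approximations that I am assuming here, not something from this thread, and the function names are made up for illustration.

```python
# Lower-bound bandwidth estimate for a video mode, counting active pixels only.
# Real links also carry blanking, so actual requirements are a bit higher.

def active_pixel_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int) -> float:
    """Uncompressed active-pixel data rate in Gbit/s."""
    return width * height * refresh_hz * bits_per_pixel / 1e9

# Approximate usable payload after line coding, in Gbit/s.
# ASSUMPTION: commonly quoted figures, rounded; check the specs for exact values.
LINK_PAYLOAD_GBPS = {
    "HDMI 2.0 (TMDS)": 14.4,          # 18 Gbit/s raw, 8b/10b coding
    "DisplayPort 1.4 (HBR3)": 25.92,  # 32.4 Gbit/s raw, 8b/10b coding
    "HDMI 2.1 (FRL)": 42.67,          # 48 Gbit/s raw, 16b/18b coding
}

def links_that_fit(required_gbps: float) -> list[str]:
    """Links whose payload rate covers the uncompressed requirement."""
    return [name for name, cap in LINK_PAYLOAD_GBPS.items() if cap >= required_gbps]

sdr = active_pixel_gbps(3840, 2160, 120, 24)  # 8 bits per channel, RGB
hdr = active_pixel_gbps(3840, 2160, 120, 30)  # 10 bits per channel, RGB

print(f"4K@120 8-bit:  {sdr:.2f} Gbit/s ->", links_that_fit(sdr))
print(f"4K@120 10-bit: {hdr:.2f} Gbit/s ->", links_that_fit(hdr))
```

Even this optimistic estimate is well past what the older TMDS signalling can carry, which is why uncompressed 4K@120Hz ends up depending on FRL or on a DisplayPort output feeding an adapter.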

Another thing to consider: How much VRAM is on the AMD card vs. the Nvidia card you’re considering? I’ve found that even if a card with less VRAM does fine with most games when it’s released, it can become a painful constraint over time, leading to the cost (and waste) of an early upgrade even if the GPU itself is still fast enough for the next generation of games.

I switched from Nvidia to AMD, and have not been sorry.

I switched from a 3000 series Nvidia to a 7000 series amd and couldn’t be happier.

And yeah, dead wrong on price. I paid half the price for my AMD compared to my old Nvidia

Ethically I prefer AMD. Heck, I would sooner buy an Intel GPU than nVidia.

AMD, no doubt. Back in 2017, AMD had recently open sourced their drivers and I was in the market for a GPU, but you know the saying: “fool me once, shame on you”. AMD used to SUCK on Linux (people always seem to forget about it), so I chose an Nvidia. I don’t regret my choice, but over the years AMD proved that it had really changed, so my new GPU is an AMD, and the experience is just so much better.

Today might be a turning point. Maybe Nvidia will change, or maybe they’ll change their mind and fuck it up; they’ve done it in the past. So I wouldn’t buy an Nvidia just in case they do the right thing for once, when AMD has already been doing the right thing for years. Also, if you go with Nvidia, forget about Wayland, and every day more and more distros are going Wayland only, so if you go Nvidia you might find yourself holding a very expensive paperweight in a few years.

I would keep in mind one thing with Nvidia. Their consumer GPU market is a drop in the bucket compared to their server, cloud and AI/ML markets. AMD dedicates real effort and resources to their hardware development and they don’t lock their features to their own platform. I’m still using a Vega 56 and haven’t really felt a need to upgrade because things like FSR just keep my card chugging along.

I come back to Lemmy and I read bullshit like this.

It’s true though… consumer graphics mean damn near nothing to them; their AI/ML profits shit all over your precious 4080s and 4090s in regards to straight profit for Nvidia.

Yeah but none of that means they’re giving up the consumer space. This is all just conjecture.

They rehash the same chip over and over again each generation with minimal gains, increase the power requirements and cut out third-party OEMs like EVGA. Give it a couple generations and I would bet they’ll only be selling the 4080/90 equivalents in the future and let Intel or AMD have the midrange and low range market, freeing up manufacturing for their AI/ML hardware. Doesn’t matter if it’s conjecture, the writing is on the wall. People are choosing to ignore it even though the market is flooded with low end Nvidia cards made to look like their low and midrange offerings aren’t selling. It’s only a matter of time; the consumer graphics market is a second class citizen for Nvidia.

I have a 3090 and just swapped over to the beta 555 drivers and KWin with explicit sync patches applied (the patches will be available out of the box with KWin 6.1). Honestly, the Wayland experience is basically flawless now for most cases. The only bug I am experiencing is that Steam shows some corruption in the web views on start up until I resize the window, but it’s a minor quibble in exchange for getting Wayland. I expect most of the minor remaining issues to be hammered out quickly.

Honestly, I’ve had genuinely bad experiences with AMD. I hated my unstable Vega 64 that would crash almost every day and was much happier when I finally ditched that card for my 3090. My laptop has a Radeon 680M and that would regularly have hard system hangs, broken video acceleration, etc.

Besides that, I also think being part of the AMD ecosystem is difficult at times. FSR sucks compared to DLSS, raytracing is sub-par, and there’s no ray reconstruction equivalent. From a compute perspective, ROCm is unstable. Even running something as simple as Darktable with ROCm would cause half of each of my photos to not render out properly. Blender with OptiX is much faster than Blender with AMD HIP. If you want to do AI, forget AMD as the ecosystem has basically gone with CUDA.

And yeah, the lack of HDMI 2.1 means no 4K 120Hz VRR on a wide variety of displays. Everyone says “why not DisplayPort”, but it is tough finding a DP capable monitor with the right specs and size sometimes. For example, try finding an equivalent of an LG C2 that has DP. There’s only one, it’s by Asus, and it costs $600 more.

It quite simply depends on which GPUs you’re looking at. Since you already have a 3070 (which works fine on modern games), I can only imagine you’re looking at the highest end GPUs available right now?

Not really. I need to replace the one in my desktop, but I’m always considering swapping cards between machines.